As the world increasingly relies on AI-driven solutions, staying ahead of the regulations that govern these technologies is not just a necessity — it’s a responsibility.

In June 2024, the European Union adopted the world’s first comprehensive regulation governing artificial intelligence: the EU AI Act. This legislation sets out a clear framework for how AI systems should be developed, deployed, and used across a wide range of industries. As AI continues to shape the world we live in, understanding this Act is essential for businesses, public communicators, and developers alike.

Here is what you need to know:

Why the AI Act is important

At its core, the EU AI Act aims to create a regulatory standard for AI within the European Union and beyond. The Act does not just apply to EU-based companies; it also affects any organization that deploys AI systems impacting people within the EU, regardless of where that organization is located.

To fully grasp the reach of this regulation, it is important to understand who it applies to — the providers creating these AI systems and the deployers who use them. A provider refers to anyone who develops or puts an AI system on the market under their name, whether for free or for payment. This includes developing the technology itself or having it developed on your behalf. Meanwhile, a deployer is an individual or organization that uses the AI system in practice. If you’re using AI under your authority in any professional capacity, you’re a deployer.

AI systems are increasingly embedded in our daily lives, so understanding the responsibilities of both parties is crucial. By setting these global standards, the Act addresses key concerns about the risks associated with AI technologies.

The EU is sending a clear message: while innovation is essential, so is protecting the rights, safety, and well-being of individuals.

Key provisions of the AI Act

The Act’s main provisions aim to regulate AI based on its risk level, ensure transparency in its use, and require ongoing monitoring after deployment. Let’s explore how these provisions shape our AI reality:

1. Risk-based approach

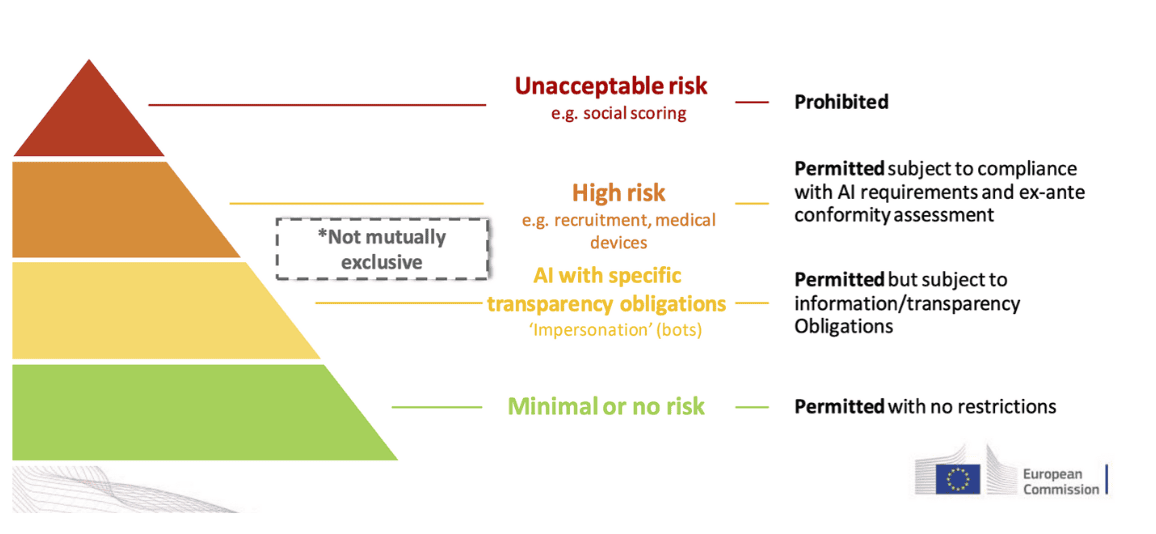

One of the most important aspects of the AI Act is its classification of AI systems based on risk. The Act divides AI systems into four categories, each with its own regulatory obligations:

The Pyramid Graphic was designed by the European Commission

- Unacceptable risk

AI systems that are seen as a direct threat to people are classified as unacceptable risk and are therefore banned outright. For example, systems that manipulate people by exploiting the vulnerabilities of specific groups fall under this category, as does real-time facial recognition in public spaces by law enforcement, which is prohibited apart from narrow exceptions.

- High risk

AI systems that affect safety or fundamental rights — like those used in hiring processes, loan approvals, education, and law enforcement — fall into the high-risk category. They require regular assessments and ongoing compliance throughout their lifecycle.

- Limited risk (or AI with specific transparency obligations)

Limited risk AI systems face fewer requirements but must follow transparency obligations. Examples of such systems include chatbots and emotion recognition systems. These technologies must disclose that they are AI-powered when interacting with users.

- Low/Minimal risk

AI systems with minimal risk have little to no additional legal obligations, but adopting voluntary codes of conduct is encouraged to promote ethical AI use. Examples include AI-enabled video games and spam filters.
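For developers, the four tiers above can be pictured as a simple lookup from use case to obligations. The following is a minimal Python sketch, not a compliance tool: the names `RiskTier`, `OBLIGATIONS`, `EXAMPLE_TIERS`, and `obligations_for` are hypothetical, the obligations are paraphrased from this article rather than from the legal text, and the use cases are limited to the examples mentioned above.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk categories described in this article."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations paraphrased from the article's tier descriptions (not legal text).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: "banned outright",
    RiskTier.HIGH: "regular assessments and ongoing compliance throughout the lifecycle",
    RiskTier.LIMITED: "transparency obligations, such as disclosing that the system is AI-powered",
    RiskTier.MINIMAL: "no additional legal obligations; voluntary codes of conduct encouraged",
}

# Hypothetical mapping built only from the examples named in this article.
EXAMPLE_TIERS = {
    "hiring": RiskTier.HIGH,
    "loan approval": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "emotion recognition": RiskTier.LIMITED,
    "video game": RiskTier.MINIMAL,
    "spam filter": RiskTier.MINIMAL,
}


def obligations_for(use_case: str) -> str:
    """Return a one-line summary of the tier and obligations for a known example."""
    tier = EXAMPLE_TIERS.get(use_case.lower().strip())
    if tier is None:
        # Anything outside the article's examples needs a real legal assessment.
        return "unknown use case: needs a proper legal assessment"
    return f"{tier.value} risk: {OBLIGATIONS[tier]}"


print(obligations_for("Chatbot"))
print(obligations_for("spam filter"))
```

In practice, classifying a real system depends on its intended purpose and context, so a lookup like this can only ever be a starting point for a conversation with legal counsel.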

2. Transparency requirements

Transparency is at the heart of the EU AI Act. When an AI system interacts with people, those users must be informed that they are dealing with AI. Providers must also label AI-generated or AI-altered content as such in a machine-readable format. This is essential to prevent misinformation and ensure users are aware when they are engaging with AI-driven material.
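To make "machine-readable" more concrete, here is a minimal Python sketch of one way a piece of generated text could carry a disclosure label alongside it. The Act does not prescribe this format: the JSON layout, the `ai_disclosure` field names, and the functions `label_ai_content` and `is_labeled_ai` are all assumptions made for illustration.

```python
import json
from datetime import datetime, timezone


def label_ai_content(text: str, generator: str, altered: bool = False) -> str:
    """Wrap content in JSON with an AI disclosure record (hypothetical schema)."""
    record = {
        "content": text,
        "ai_disclosure": {
            "ai_generated": True,
            # True when AI modified existing material rather than creating it.
            "altered_existing_content": altered,
            "generator": generator,
            "labeled_at": datetime.now(timezone.utc).isoformat(),
        },
    }
    return json.dumps(record, ensure_ascii=False)


def is_labeled_ai(payload: str) -> bool:
    """Check whether a JSON payload carries the AI disclosure flag."""
    data = json.loads(payload)
    return bool(data.get("ai_disclosure", {}).get("ai_generated", False))


payload = label_ai_content("Draft press release text.", generator="example-model")
print(is_labeled_ai(payload))
```

The point of the sketch is that software, not just a human reader, can detect the label. Real deployments would more likely rely on an established provenance or watermarking standard than on an ad hoc schema like this one.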

3. Market control and post-market monitoring

Even after AI systems hit the market, they are not free from scrutiny. Providers of high-risk AI systems must set up post-market monitoring systems to track and document any potential risks or issues. They are required to report serious incidents to authorities within strict timeframes.

National market surveillance authorities will ensure ongoing compliance, while the European Commission will keep a public database of high-risk AI systems for an additional layer of transparency.

Key criticisms and limitations of the AI Act

Despite the Act’s ambition, critics argue that it leaves some gaps and may either over- or under-regulate certain AI technologies.

1. Rushed compromises

Some experts believe that the EU AI Act was developed too quickly, leading to significant compromises. For instance, the Act requires AI-generated content like deepfakes to be labeled, but critics say this isn’t enough. They argue it doesn’t fully tackle issues like voter manipulation or misuse of images without consent. A stronger approach may be needed to handle these risks effectively.

2. Over- and under-regulation risks

The Act’s risk-based system, though logical in theory, may result in both over- and under-regulation. For example, autonomous delivery robots in controlled environments are considered high-risk, even though they pose minimal danger. On the other hand, AI systems used for migration predictions or detecting money laundering, which carry a high risk of discrimination, are not classified as high-risk and, therefore, don’t have strict requirements.

3. Challenges to innovation and the ‘Brussels Effect’

The Act’s strict rules could slow down innovation in some sectors. It may also weaken the “Brussels Effect,” where EU regulations often set global standards. While the EU seeks to lead in AI regulation, other regions might adopt more flexible rules and potentially reduce the EU’s influence globally.

What’s next for communicators

For communicators, the EU AI Act introduces some key challenges and responsibilities. In our next blog post, we’ll take a deeper dive into the specific chapters of the EU AI Act that are most relevant for communication professionals. We’ll explore actionable insights and best practices to help you stay compliant and ahead of the curve.

Stay tuned as we navigate the evolving AI landscape, helping you stay sharp, informed, and ready for what’s next.

Got questions? Leave a comment, and let’s keep the discussion going. The future of AI in communications is here — let’s navigate it with confidence.